- Links on the web count as votes. Initially, all votes are equal.

- Pages which receive more votes become more important (and rank higher).

- More important pages cast more important votes.

But Google didn’t stop there: they innovated with anchor text, topic-modeling, content analysis, trust signals, user engagement, and more to deliver better and better results.

Links are no longer equal. Not by a long shot.

Links From Popular Pages Cast More Powerful Votes

Let’s begin with a foundational principle. This concept formed the basis of Google’s original PageRank patent, and quickly helped vault Google to become the most popular search engine in the world.

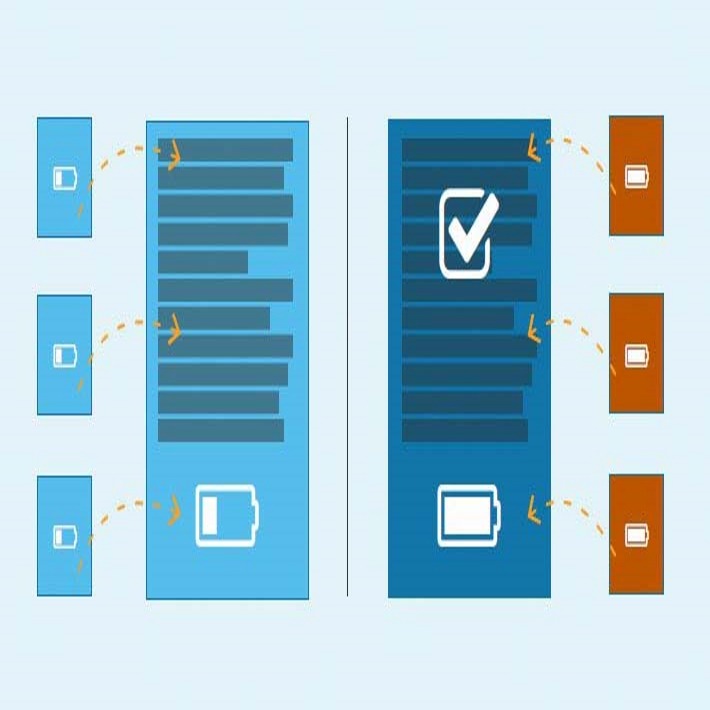

PageRank can become incredibly complex very quickly, but to oversimplify: the more votes (links) a page has pointed to it, the more PageRank (and other possible link-based signals) it accumulates. The more votes it accumulates, the more it can pass on to other pages through outbound links.
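To make the voting idea concrete, here is a minimal sketch of the textbook, simplified PageRank computed by power iteration. It is not Google’s production algorithm. The damping factor of 0.85 is the value commonly cited from the original PageRank paper, and the page names in the example graph are made up. Every page starts with an equal score and, on each pass, splits its score evenly across its outbound links, so a link from a page that has accumulated many votes passes along more.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank computed by power iteration.

    links maps each page to the list of pages it links to.
    Returns a dict of page -> score; the scores sum to 1.
    """
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}  # initially, all votes are equal

    for _ in range(iterations):
        # Every page gets a small baseline share (the "random jump" term).
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its current score evenly across its outbound links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outbound links spreads its score across all pages.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical link graph: x and y each receive exactly one link.
web = {
    "popular": ["x"],
    "fan1": ["popular"],
    "fan2": ["popular"],
    "fan3": ["popular"],
    "obscure": ["y"],
}
scores = pagerank(web)
for page, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(f"{page:8} {score:.3f}")
```

In this toy graph, `x` ends up scoring higher than `y` even though each receives a single link, because the link to `x` comes from `popular`, which is itself linked to by three other pages.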

In basic terms, popular pages are ones that have accumulated a lot of votes themselves. Scoring a link from a popular page can typically be more powerful than earning a link from a page with fewer link votes.

Links “Inside” Unique Main Content Pass More Value than Boilerplate Links

Google’s Reasonable Surfer, Page Segmentation, and Boilerplate patents all suggest valuing content and links more highly if they are positioned in the unique, main text area of the page, versus sidebars, headers, and footers, aka the “boilerplate.”

It certainly makes sense, as boilerplate links are not truly editorial but are typically inserted automatically by a CMS (even if a human decided to put them there). Google’s Quality Rater Guidelines encourage evaluators to focus on the “Main Content” of a page.

Similarly, SEO experiments have found that links hidden within expandable tabs or accordions (by either CSS or JavaScript) may carry less weight than fully visible links, though Google says they fully index and weight these links.

Links Higher Up in the Main Content Cast More Powerful Votes

If you had a choice between two links, which would you choose?

- One placed prominently in the first paragraph of a page, or

- One placed lower beneath several paragraphs

Of course, you’d pick the link visitors would likely click on, and Google would want to do the same. Google’s Reasonable Surfer Patent describes methods for giving more weight to links it believes people will actually click, including links placed in more prominent positions on the page.
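The Reasonable Surfer idea can be sketched as a change to how a page divides its vote: instead of splitting it evenly, each link gets a share proportional to how likely it is to be clicked. This is an illustration only. The region weights, the 0.8 position decay, and the `Link`, `click_weight`, and `split_vote` names are all hypothetical; Google has not published how its models score links.

```python
from dataclasses import dataclass

# Hypothetical weights for illustration only; Google has not published real values.
REGION_WEIGHT = {"main": 1.0, "sidebar": 0.3, "header": 0.2, "footer": 0.1}
POSITION_DECAY = 0.8  # hypothetical: each later link in a region gets 20% less weight


@dataclass
class Link:
    target: str
    region: str    # "main", "sidebar", "header", or "footer"
    position: int  # 0 = first link in its region, 1 = second, and so on


def click_weight(link: Link) -> float:
    """Hypothetical click likelihood: region weight, reduced for links further down."""
    return REGION_WEIGHT[link.region] * POSITION_DECAY ** link.position


def split_vote(page_score: float, links: list[Link]) -> dict[str, float]:
    """Divide a page's outgoing vote in proportion to each link's click weight,
    instead of evenly as in the original PageRank model."""
    weights = [click_weight(link) for link in links]
    total = sum(weights)
    votes: dict[str, float] = {}
    for link, weight in zip(links, weights):
        votes[link.target] = votes.get(link.target, 0.0) + page_score * weight / total
    return votes


links = [
    Link("/first-paragraph", "main", 0),
    Link("/lower-down", "main", 3),
    Link("/footer-link", "footer", 0),
]
votes = split_vote(1.0, links)
for target, vote in votes.items():
    print(f"{target:18} {vote:.3f}")
```

Here the link in the first paragraph of the main content receives the largest share of the page’s vote, the link further down the main content gets less, and the footer link gets the least.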

Links With Relevant Anchor Text May Pass More Value

Also included in Google’s Reasonable Surfer patent is the concept of giving more weight to links with relevant anchor text. This is only one of several Google patents where anchor text plays an important role.

Multiple experiments over the years have repeatedly confirmed that relevant anchor text boosts a page’s ranking more than generic or non-relevant anchor text does.

It’s important to note that the same Google patents that propose boosting the value of highly relevant anchors also discuss devaluing, or even ignoring altogether, off-topic or irrelevant anchors.

That’s not to say you should spam your pages with an abundance of exact-match anchors. Data typically shows that high-ranking pages have a healthy, natural mix of relevant anchors pointing to them.

Similarly, links may carry the context of the words and phrases around them. Though hard evidence is scant, this is mentioned in Google’s patents, and it makes sense that a link surrounded by topically relevant content would be more contextually relevant than one that isn’t.

Links from Unique Domains Matter More than Links from Previously Linking Sites

Experience shows that it’s far better to have 50 links from 50 different domains than to have 500 more links from a site that already links to you.

This makes sense, as Google’s algorithms are designed to measure popularity across the entire web and not simply popularity from a single site.

In fact, this idea has been supported by nearly every SEO ranking factor correlation study ever performed. The number of unique linking root domains is almost always a better predictor of Google rankings than a site’s raw number of total links.
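Counting unique linking root domains rather than raw links is easy to illustrate. This sketch takes the URLs that link to a page and reports both numbers. The `root_domain` helper is a deliberate simplification (it keeps the last two labels of the hostname), which is wrong for suffixes such as `.co.uk`; a real tool would use the Public Suffix List. The backlink lists are hypothetical.

```python
from urllib.parse import urlsplit


def root_domain(url: str) -> str:
    """Naive root domain: the last two labels of the hostname.

    Simplification: wrong for multi-part suffixes such as .co.uk; a real tool
    would consult the Public Suffix List.
    """
    host = urlsplit(url).hostname or ""
    return ".".join(host.split(".")[-2:])


def link_profile(backlinks: list[str]) -> tuple[int, int]:
    """Return (total links, unique linking root domains)."""
    return len(backlinks), len({root_domain(url) for url in backlinks})


# Hypothetical backlink lists for two pages.
many_links_one_site = [f"https://blog.example.com/post-{i}" for i in range(500)]
fewer_links_many_sites = [f"https://site{i}.com/article" for i in range(50)]

print(link_profile(many_links_one_site))     # (500, 1)
print(link_profile(fewer_links_many_sites))  # (50, 50)
```

The first page has ten times as many links, but the second has fifty times as many linking root domains, which is the number correlation studies tend to find more predictive.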

Rand points out that this principle is not always universally true. “When given the option between a 2nd or 3rd link from the NYTimes vs. randomsitexyz, it’s almost always more rank-boosting and marketing helpful to go with another NYT link.”

External Links are More Influential than Internal Links

If we extend the concept from the previous section, it follows that links from external sites should count more than internal links from your own site. The same correlation studies almost always show that high-ranking sites are associated with more external links than lower-ranking sites.

Search engines seem to follow the concept that what others say about you is more important than what you say about yourself.

That’s not to say that internal links don’t count. On the contrary, internal linking and good site architecture can be hugely impactful on Google rankings. That said, building external links is often the fastest way to higher rankings and more traffic.

Links From Topically Relevant Pages May Cast More Powerful Votes

You run a dairy farm. All things being equal, would you rather have a link from:

- The National Dairy Association

- The Association of Automobile Mechanics

Hopefully, you chose the first because you recognize it’s more relevant. Google may act the same way toward topically relevant links through several mechanisms, including Topic-Sensitive PageRank, phrase-based indexing, and local inter-connectivity.
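Topic-Sensitive PageRank, as described in the research literature, biases PageRank’s random “jump” step toward pages known to be about a topic, so votes that originate from on-topic pages carry more weight for that topic. Here is a minimal sketch of that idea. The page names and the choice of which pages count as “dairy” pages are hypothetical, this is not necessarily how Google implements it, and phrase-based indexing and local inter-connectivity are not shown.

```python
def topic_sensitive_pagerank(links, topic_pages, damping=0.85, iterations=50):
    """PageRank whose random jump lands only on pages known to be about a topic.

    links maps each page to the pages it links to; topic_pages is the set of
    pages treated as on-topic (a hypothetical, hand-picked set here).
    """
    pages = set(links) | set(topic_pages)
    for targets in links.values():
        pages.update(targets)
    # Bias vector: all of the random-jump probability goes to topic pages.
    teleport = {p: (1.0 / len(topic_pages) if p in topic_pages else 0.0) for p in pages}
    rank = dict(teleport)

    for _ in range(iterations):
        new_rank = {p: (1.0 - damping) * teleport[p] for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                for target in targets:
                    new_rank[target] += damping * rank[page] / len(targets)
            else:
                # Pages without outbound links jump according to the topic bias.
                for target in pages:
                    new_rank[target] += damping * rank[page] * teleport[target]
        rank = new_rank
    return rank


web = {
    "dairy-association": ["my-farm"],
    "mechanics-association": ["other-farm"],
    "cheese-blog": ["dairy-association"],
}
dairy_scores = topic_sensitive_pagerank(web, {"dairy-association", "cheese-blog"})
print(dairy_scores["my-farm"] > dairy_scores["other-farm"])  # True
```

Both farms receive exactly one link, but only the link from the dairy association is fed by the topic bias, so `my-farm` scores higher for the dairy topic.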

Links From Fresh Pages Can Pass More Value Than Links From Stale Pages

Freshness counts.

Google uses several ways of evaluating content based on freshness. One way to determine the relevancy of a page is to look at the freshness of the links pointing at it.

The basic concept is that pages with links from fresher pages (e.g., newer pages and those updated more regularly) are likely more relevant than pages with links from mostly stale pages, or pages that haven’t been updated in a while.

For a good read on the subject, Justin Briggs has described and named this concept FreshRank.

A page with a burst of links from fresher pages may indicate immediate relevance, compared to a page that has had the same old links for the past 10 years. In these cases, the rate of link growth and the freshness of the linking pages can have a significant influence on rankings.

It’s important to note that “old” is not the same thing as stale. A stale page is one that:

- Isn’t updated, often with outdated content

- Earns fewer new links over time

- Exhibits declining user engagement

If a page doesn’t meet these requirements, it can be considered fresh – no matter its actual age. As Rand notes, “Old crusty links can also be really valuable, especially if the page is kept up to date.”

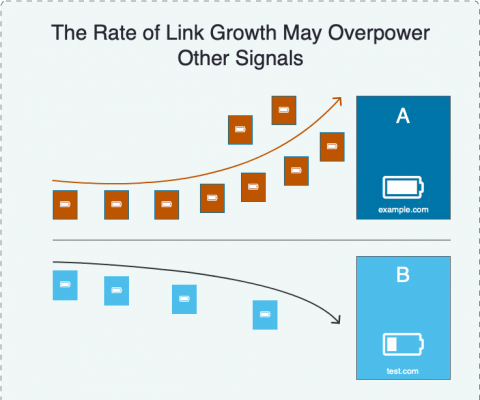

The Rate of Link Growth Can Signal Freshness

If Google sees a burst of new links to a page, this could be a signal of relevance.

By the same measure, a decrease in the overall rate of link growth could indicate that the page has become stale and is likely to be devalued in search results.

All of these freshness concepts, and more, are covered by Google’s Information Retrieval Based on Historical Data patent.

For example, if folks start linking to your personal website because you’re about to get married, the jump in your link growth rate could lead search engines to deem your site more relevant and fresh (at least as far as this current event goes).
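Link growth rate is straightforward to measure if you record when each backlink was first seen. This sketch compares the number of new links in the most recent 30-day window with the window before it. The `link_velocity` function and the 30-day window are hypothetical choices for illustration, not a metric Google has published.

```python
from datetime import date, timedelta


def link_velocity(first_seen, today, window_days=30):
    """Ratio of new links in the latest window to new links in the window before it.

    > 1 suggests link growth is accelerating; < 1 suggests it is slowing.
    Hypothetical metric for illustration only.
    """
    window = timedelta(days=window_days)
    recent = sum(1 for d in first_seen if today - window < d <= today)
    previous = sum(1 for d in first_seen if today - 2 * window < d <= today - window)
    if previous == 0:
        # Nothing to compare against: report a burst if there are new links,
        # otherwise no change.
        return float("inf") if recent else 1.0
    return recent / previous


today = date(2024, 6, 30)
# Hypothetical page that suddenly attracts links (e.g. a wedding announcement).
burst = [today - timedelta(days=i) for i in range(20)] + [today - timedelta(days=45)] * 2
# Hypothetical page whose new links have dried up.
slowing = [today - timedelta(days=35 + i) for i in range(10)] + [today - timedelta(days=5)]

print(link_velocity(burst, today))    # 10.0
print(link_velocity(slowing, today))  # 0.1
```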

Google Devalues Spam and Low-Quality Links

While there are trillions of links on the web, the truth is that Google likely ignores a large swath of them.

Google’s goal is to focus on editorial links, i.e., unique links that you don’t control, freely placed by others. Since Penguin 4.0, Google has implied that their algorithms simply ignore links that they don’t feel meet these standards. These include links generated by negative SEO and link schemes.

That said, there’s plenty of debate about whether Google truly ignores all low-quality links, as there’s evidence that low-quality links, especially those Google might see as manipulative, may actually hurt you.

Sites Linking Out to Authoritative Content May Count More Than Those That Do Not

While Google claims that linking out to quality sites isn’t an explicit ranking factor, they’ve also made statements in the past that it can impact your search performance.

Furthermore, multiple SEO experiments and anecdotal evidence over the years suggest that linking out to relevant, authoritative sites can result in a net positive effect on rankings and visibility.

Pages That Link To Spam May Devalue The Other Links They Host

Google’s past statements on the subject also point to the flip side: Google trusts sites less when they link to spam.

This concept can be extended further, as there’s ample evidence of Google demoting sites it believes are hosting paid links or are part of a private blog network.

Basic advice: link to authoritative sites (and avoid linking to bad sites) when it will benefit your audience.

Nofollowed Links Aren’t Followed, But May Have Value In Some Cases

Google invented the nofollow link specifically because many webmasters found it hard to prevent spammy outbound links on their sites, especially those generated by comment spam and UGC.

A common belief is that nofollow links don’t count at all, but Google’s own language leaves some wriggle room. Google doesn’t say it never follows them; it says that “in general” it doesn’t follow them, and that it only “essentially” drops the links from its web graph.

That said, numerous SEO experiments and correlation data all suggest that nofollow links can have some value, and webmasters would be wise to maximize their value.
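To audit which of a page’s outbound links are nofollowed, you can inspect each anchor’s `rel` attribute. Note that `rel` holds a space-separated list of case-insensitive values, so `rel="NoFollow noopener"` is still nofollow. Here is a minimal sketch using Python’s standard-library `html.parser`; the `LinkRelParser` class name and the sample markup are illustrative.

```python
from html.parser import HTMLParser


class LinkRelParser(HTMLParser):
    """Collects (href, is_nofollow) for every <a href> element on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return
        # rel is a space-separated list of case-insensitive values, e.g. "nofollow noopener".
        rel_values = (attributes.get("rel") or "").lower().split()
        self.links.append((href, "nofollow" in rel_values))


page = """
<p>Read the <a href="https://example.com/editorial">editorial coverage</a>
or a <a href="https://example.com/from-a-comment" rel="NoFollow noopener">comment link</a>.</p>
"""
parser = LinkRelParser()
parser.feed(page)
for href, nofollow in parser.links:
    print(href, "nofollow" if nofollow else "followed")
```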

Many JavaScript Links Pass Value, But Only If Google Renders Them

In the old days of SEO, it was common practice to “hide” links using JavaScript, knowing Google couldn’t crawl them.

Today, Google has gotten significantly better at crawling and rendering JavaScript, so most JavaScript links will count.

That said, Google still may not crawl or index every JavaScript link. For one, they need extra time and effort to render the JavaScript, and not every site delivers compatible code. Furthermore, Google only considers full links with an anchor tag and href attribute.
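The anchor-tag rule can be checked with a small parser. This sketch, built on Python’s standard `html.parser`, lists the proper `<a href>` links in a snippet and flags elements that navigate only through an `onclick` handler. It parses static HTML and does not execute JavaScript, so it cannot show what Google sees after rendering. The class name, the sample markup, and the `goTo` handler in it are hypothetical.

```python
from html.parser import HTMLParser


class CrawlableLinkParser(HTMLParser):
    """Separates <a href> links from elements that navigate only via onclick."""

    def __init__(self):
        super().__init__()
        self.crawlable = []    # hrefs from proper <a href> links
        self.script_only = []  # (tag, onclick) pairs with no href to follow

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "a" and attributes.get("href"):
            self.crawlable.append(attributes["href"])
        elif attributes.get("onclick"):
            self.script_only.append((tag, attributes["onclick"]))


rendered_html = """
<a href="/pricing">Pricing</a>
<span onclick="location.href='/features'">Features</span>
<a onclick="goTo('/about')">About</a>
"""
parser = CrawlableLinkParser()
parser.feed(rendered_html)
print("Links:", parser.crawlable)
print("Script-only navigation:", parser.script_only)
```

Only `/pricing` is a link in the sense described above; the other two elements may work for visitors but give a crawler no `href` to follow.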

If A Page Links To The Same URL More Than Once, The First Link Has Priority

… Or more specifically, only the first anchor text counts.

Google has explained that when it crawls a page with two or more links pointing to the same URL, PageRank flows normally through both, but only the first anchor text is used for ranking purposes.

This scenario often comes into play when your sitewide navigation links to an important page, and you also link to it within an article below.
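The rule as described can be modeled in a few lines: walk a page’s links in source order and keep only the first anchor text for each URL. This illustrates the described behavior rather than Google’s code, and the URLs and anchors are made up.

```python
def first_anchor_per_url(links):
    """Keep only the first anchor text seen for each URL.

    links is a list of (url, anchor_text) pairs in the order they appear in the HTML.
    """
    first = {}
    for url, anchor in links:
        first.setdefault(url, anchor)  # later anchors to the same URL are ignored
    return first


# Hypothetical page: sitewide navigation comes before the article body in the HTML.
page_links = [
    ("/seo-guide", "Guides"),                   # navigation link
    ("/pricing", "Pricing"),                    # navigation link
    ("/seo-guide", "beginner's guide to SEO"),  # in-article link
]
print(first_anchor_per_url(page_links))
# {'/seo-guide': 'Guides', '/pricing': 'Pricing'}
```

Because the navigation link appears earlier in the HTML, its generic “Guides” anchor is the one that counts, and the descriptive anchor in the article body is ignored.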

Through testing, folks have discovered a number of clever ways to bypass the First Link Priority rule, but newer studies haven’t been published for several years.

Robots.txt and Meta Robots May Impact How and Whether Links Are Seen

Seems obvious, but in order for Google to weigh a link in its ranking algorithm, it has to be able to crawl and follow it. Unsurprisingly, there are a number of site- and page-level directives that can get in Google’s way. These include:

- The URL is blocked from crawling by robots.txt

- Robots meta tag or X-Robots-Tag HTTP header use the “nofollow” directive

- The page is set to “noindex, follow”, but Google eventually stops crawling it

Often Google will include a URL in its search results if other pages link to it, even if that page is blocked by robots.txt. But because Google can’t actually crawl the page, any links on the page are virtually invisible.
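Python’s standard library includes a robots.txt parser, `urllib.robotparser`, which you can use to check whether a URL is blocked before assuming the links on it can be seen. The robots.txt rules and URLs below are hypothetical. Python’s parser implements the basic rules and may differ from Googlebot on edge cases such as wildcard patterns, so treat it as a quick check rather than a definitive answer.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())

for url in ("https://example.com/blog/post", "https://example.com/private/report.html"):
    if robots.can_fetch("Googlebot", url):
        print(url, "-> crawlable, links on it can be discovered")
    else:
        print(url, "-> blocked, links on it are effectively invisible")
```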

Disavowed Links Don’t Pass Value (Typically)

If you’ve built some shady links, or been hit by a penalty, you can use Google’s disavow tool to help wipe away your sins.

By disavowing, Google effectively removes these backlinks from consideration when they crawl the web.

On the other hand, if Google thinks you’ve made a mistake with your disavow file, they may choose to ignore it entirely – probably to prevent you from self-inflicted harm.
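A disavow file is a plain text file with one entry per line: a full URL to disavow a single page, `domain:` followed by a domain name to disavow a whole site, and `#` for comment lines. This sketch parses that format and checks whether a given linking URL is covered. The function names and entries are hypothetical, and treating a `domain:` entry as also covering its subdomains is an assumption of the sketch.

```python
from urllib.parse import urlsplit


def parse_disavow(text):
    """Parse disavow-file text into (disavowed domains, disavowed URLs)."""
    domains, urls = set(), set()
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if line.lower().startswith("domain:"):
            domains.add(line[len("domain:"):].strip().lower())
        else:
            urls.add(line)
    return domains, urls


def is_disavowed(link_url, domains, urls):
    """Check a linking URL against parsed disavow entries.

    Assumption: a domain: entry also covers that domain's subdomains.
    """
    if link_url in urls:
        return True
    host = (urlsplit(link_url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in domains)


DISAVOW_FILE = """\
# Hypothetical entries: links from a paid directory we could not get removed
domain:spammy-directory.example
https://old-blog.example/cheap-links.html
"""
domains, urls = parse_disavow(DISAVOW_FILE)
print(is_disavowed("https://www.spammy-directory.example/listing", domains, urls))  # True
print(is_disavowed("https://old-blog.example/cheap-links.html", domains, urls))     # True
print(is_disavowed("https://old-blog.example/good-post.html", domains, urls))       # False
```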

source: MOZ